Investomation Architecture

Why Making Accurate Choropleths Is Hard

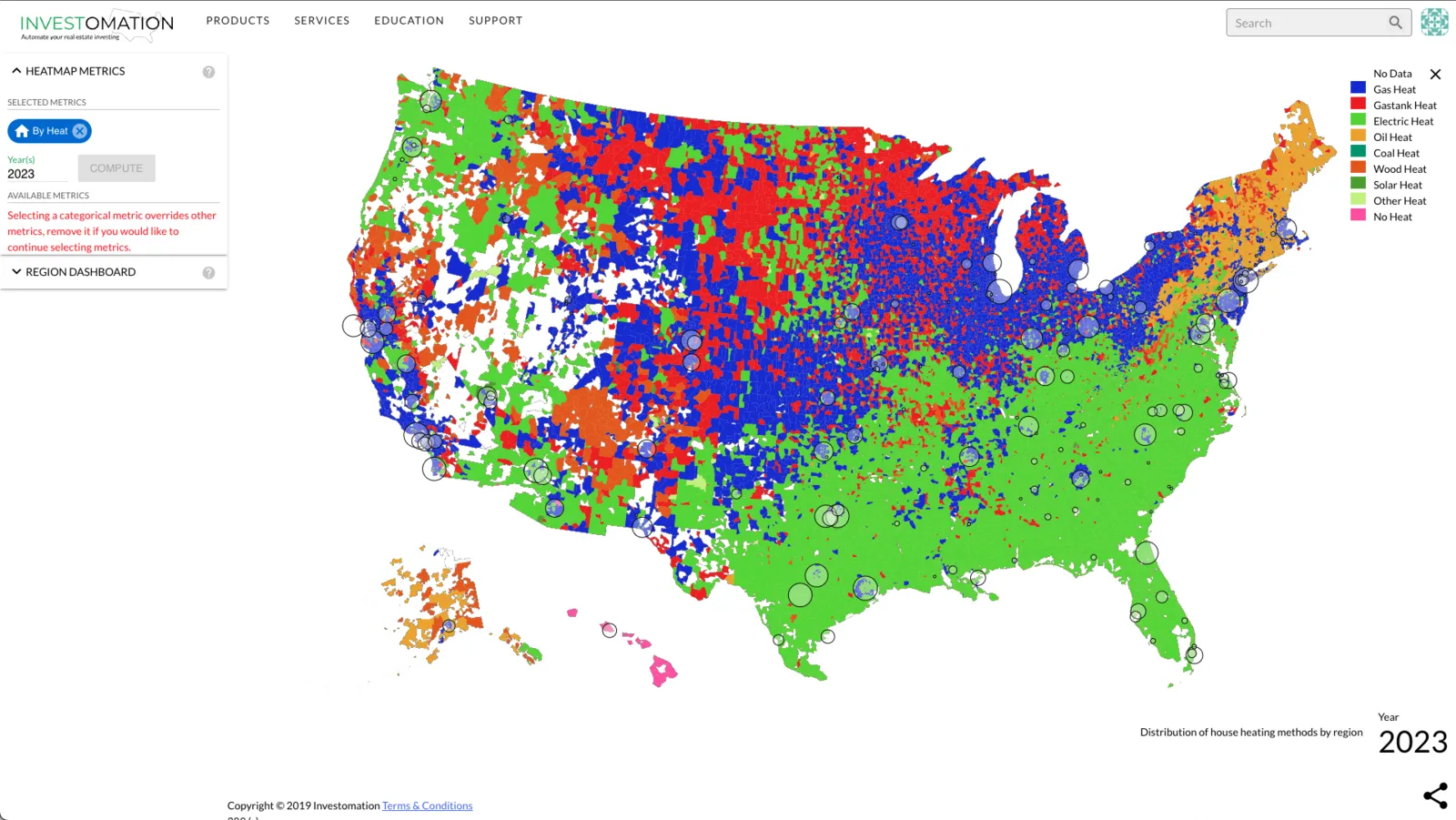

There are plenty of choropleths online showing any metric you can think of, from election results to demographics. The problem is that they either cover the US as a whole and are crude (good enough to see state-level trends, but not those of individual neighborhoods), or they focus only on a specific region or city. In short, they don't scale, and if you try to stitch together data from multiple sources, you'll notice that it often doesn't match up.

There are a ton of nuances when normalizing data, and different agencies use different normalization techniques. This is especially apparent when comparing data from different cities, since cities use different methodologies to gather and present their data. Even the agencies tracking this data have been running into this issue. For example, police departments have been voluntarily submitting local crime data to the FBI since the 1930s, yet to this day we still don't have an accurate national picture of crime from the FBI, because the metrics submitted by individual police departments are not consistent. Police departments track crimes differently, and they report them differently.

To make things even more complicated, the regions themselves are not consistent. Some data is reported by zip code, some by census tract, and other information, such as school district and voting data, uses its own regions. The data is there, but it's a mess, and organizing it yourself is a full-time job, especially when you focus on multiple markets.

How Investomation Tackles This Problem

This is where Investomation comes in. We've solved the problem of consistency by building our own system for reading information in and normalizing it. The usual sources of information are spreadsheets, other choropleths/heatmaps, and web scraping. Spreadsheets are easy to feed into the database, but the data must still be curated and cross-verified against existing data. Even the Census Bureau sometimes publishes bad data that may take months to detect and fix. Our curation logic has to look for outliers and compare the data against other related metrics to see if it makes sense, and only then can we feed it into our database.
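To make the outlier check concrete, here is a minimal sketch of one common approach, the modified z-score based on the median absolute deviation. This is an illustration only, not Investomation's actual curation logic; the function name `flag_outliers`, the threshold of 3.5, and the sample rent figures are all assumptions.

```python
from statistics import median

def flag_outliers(values, threshold=3.5):
    """Return the indices of values whose modified z-score exceeds threshold.

    Hypothetical helper: uses the median absolute deviation (MAD), which is
    less distorted by the outliers themselves than mean and standard deviation.
    """
    med = median(values)
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        # At least half the values equal the median; nothing to scale by.
        return []
    return [
        i for i, v in enumerate(values)
        if 0.6745 * abs(v - med) / mad > threshold
    ]

# Made-up median rents for six neighboring regions; one looks like a typo.
median_rents = [1200, 1250, 1180, 1300, 12500, 1220]
suspicious = flag_outliers(median_rents)
print(suspicious)  # indices that need a manual look before import
```

A flagged value is not necessarily wrong, which is why the text above also calls for comparing it against related metrics before accepting or rejecting it.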

Web-scraped data tends to be more up-to-date but is often full of bad records that must be filtered and normalized before it meets our standards. We also have the ability to feed other choropleths into our database. Basically, we sample the image and convert each color to a value. This data tends to be less precise but is a good way to ballpark or cross-verify the accuracy of data from other sources.
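The color-to-value step can be sketched as a nearest-color lookup against the map's legend. This is a simplified illustration under stated assumptions: the legend is already known as a list of RGB colors with values, and `color_to_value` and the sample legend are hypothetical names and numbers, not part of Investomation's code.

```python
def color_to_value(pixel, legend):
    """Return the value of the legend entry whose RGB color is nearest to pixel.

    pixel:  (r, g, b) tuple sampled from the image
    legend: list of ((r, g, b), value) pairs read off the map's legend
    """
    def squared_distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    nearest_color, value = min(
        legend, key=lambda entry: squared_distance(entry[0], pixel)
    )
    return value

# Made-up three-step legend: light yellow = 10, orange = 30, dark red = 50.
legend = [
    ((255, 255, 204), 10.0),
    ((253, 141, 60), 30.0),
    ((128, 0, 38), 50.0),
]
sampled = color_to_value((250, 150, 70), legend)
print(sampled)
```

Because each pixel snaps to a legend bucket, the result can only be as precise as the legend's steps, which is consistent with using this data for ballpark estimates and cross-checks rather than as a primary source.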

Under the Hood

Once the data is cleaned up, it's stored in our database. However, our database is not just a giant flat table. It's a set of layers, each processing its own dimension of data. Some of the layers include:

- Infill: Many regions lack accurate data; this is especially true of rural areas. However, by analyzing historical trends and the data that is available for nearby regions, we're sometimes able to fill in the blanks.

- Granularity: Some data is only available on a per-county basis, and other data doesn't use a standard granularity at all. Our database needs to take this data and standardize it so that it can be compared against other metrics consistently.

- Timeframe: Extrapolating data in time is not as simple as it seems. There are often gaps and outliers that must be accounted for. A large company opening offices in a rural city may make it look like job growth has skyrocketed in a given year, but this does not imply a growth trend for the area.

- Algorithms: Some metrics are algorithm-based, which means they're computed from several other metrics. The simplest example is population density, which takes the total population of an area and divides it by the land area. I want to eventually expose this feature to the end user as well, as it may be useful for creating custom metrics based on the user's specific needs (for example, rent/price ratio, or automatically computing sale price per square foot for the area).
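As an illustration of the algorithm-based metrics described in the last bullet, the sketch below computes derived metrics from stored base metrics. The registry `DERIVED_METRICS`, the function `compute_derived`, the field names, and the sample numbers are assumptions made for this example; the actual layer may be organized quite differently.

```python
def population_density(m):
    # Total population divided by land area, as described above.
    return m["population"] / m["land_area_sq_mi"]

def rent_to_price_ratio(m):
    # Monthly rent relative to sale price; one possible custom metric.
    return m["median_monthly_rent"] / m["median_sale_price"]

# Hypothetical registry: metric name -> function of a region's base metrics.
DERIVED_METRICS = {
    "population_density": population_density,
    "rent_to_price_ratio": rent_to_price_ratio,
}

def compute_derived(name, base_metrics):
    """Compute one derived metric, or None if an input is missing or zero."""
    try:
        return DERIVED_METRICS[name](base_metrics)
    except (KeyError, ZeroDivisionError):
        return None

# Made-up base metrics for a single region.
region = {
    "population": 12000,
    "land_area_sq_mi": 4.0,
    "median_monthly_rent": 1500,
    "median_sale_price": 300000,
}
density = compute_derived("population_density", region)
ratio = compute_derived("rent_to_price_ratio", region)
print(density, ratio)
```

Keeping each derived metric as a small function over base metrics is one way a user-defined metric could later be added to the same registry without touching the stored data.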

This architecture allows us to add new metrics in a matter of days once we have the data for them. It is flexible enough to work even with sparse data, and it gets better at estimating as more data is fed in.

Additionally, the modular nature of this architecture allows us to change the implementation on a per-layer basis. For example, let's say we decide to train a GPT-like neural network to do a better job of predicting trends in rural areas than our existing algorithm. It's not far-fetched to think that an AI trained to see patterns in demographics would do a decent job of estimating values for under-represented areas. This would significantly improve the accuracy of the infill layer and benefit the entire system without needing to rebuild other layers. This is what makes Investomation unique.
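The per-layer swap can be pictured as a pipeline that accepts any infill function with the same signature. The sketch below is a toy version under stated assumptions: `neighbor_mean_infill`, `run_infill_layer`, and the region names are hypothetical, and the simple neighbor average merely stands in for whatever algorithm the infill layer really uses.

```python
from typing import Callable, Dict, List, Optional

Values = Dict[str, Optional[float]]   # region id -> metric value (None = missing)
Neighbors = Dict[str, List[str]]      # region id -> adjacent region ids
InfillFn = Callable[[Values, Neighbors], Values]

def neighbor_mean_infill(values: Values, neighbors: Neighbors) -> Values:
    """Fill each missing region with the mean of its known neighbors."""
    filled = dict(values)
    for region, value in values.items():
        if value is None:
            known = [
                values[n] for n in neighbors.get(region, [])
                if values.get(n) is not None
            ]
            if known:
                filled[region] = sum(known) / len(known)
    return filled

def run_infill_layer(values: Values, neighbors: Neighbors,
                     infill: InfillFn = neighbor_mean_infill) -> Values:
    # Other layers only depend on this call, not on how infill works inside.
    return infill(values, neighbors)

values = {"A": 10.0, "B": None, "C": 20.0}
neighbors = {"B": ["A", "C"]}
result = run_infill_layer(values, neighbors)
print(result)
```

Replacing the default with a learned model would only require passing a different function as `infill`, for example one that wraps a trained predictor, while the rest of the pipeline stays unchanged.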