Limitations of Dall-E 3 (Part 2)

This is a continuation of my post from last week: Learning the limitations of Dall-E 3. I know what you're thinking: why is this guy dedicating multiple posts to an AI image generator in a real estate blog? Well, we're getting to a point where you can start outsourcing real-estate tasks to AI (including web-scraping and research automation, which I will cover in more detail in the future). But before we get there, it's important to understand where this AI excels and where it falls on its face.

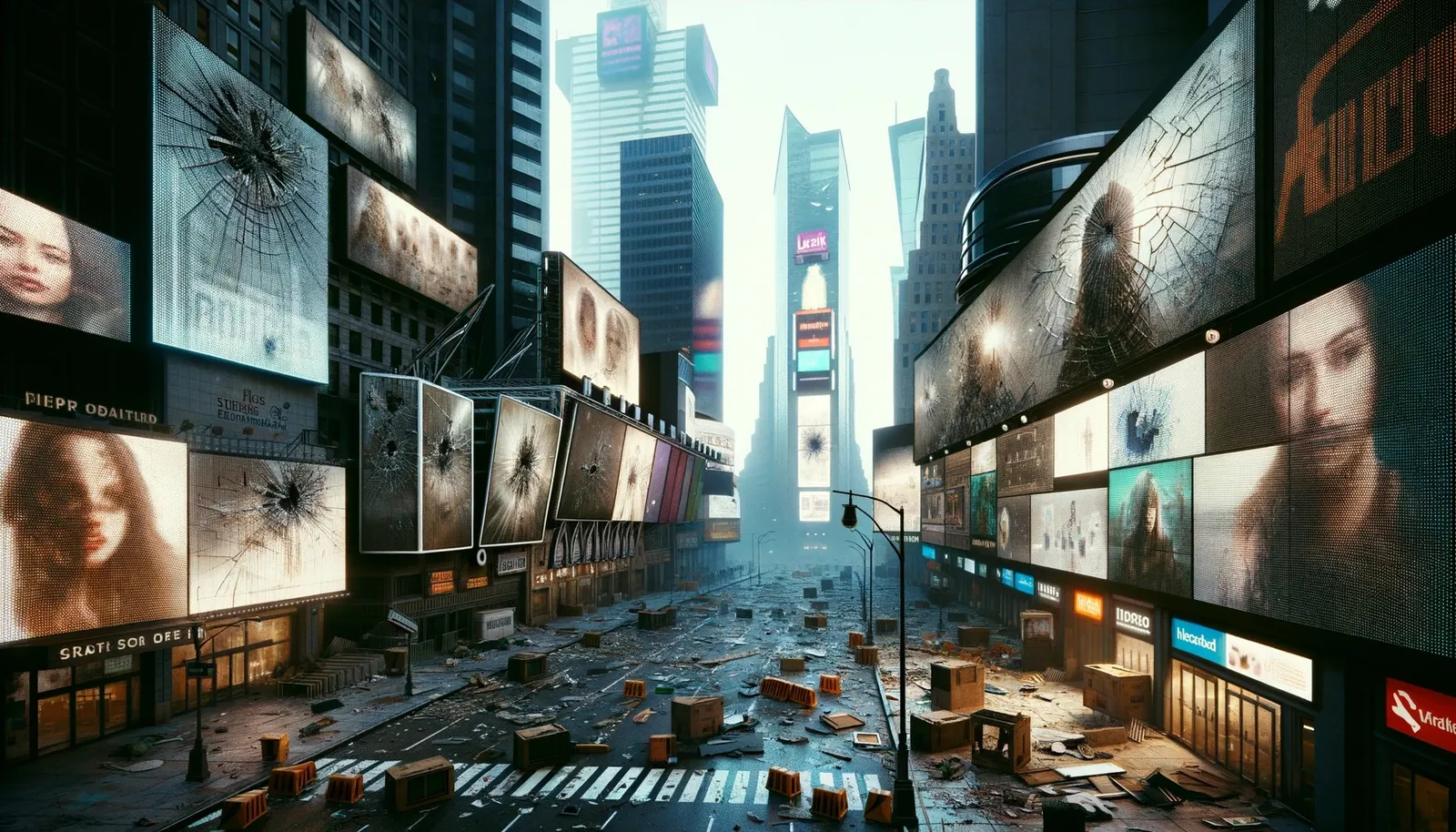

Last time we concluded with an image of deserted Las Vegas, after several unsuccessful attempts to get Dall-E to imagine the city without its iconic lights. I played with Times Square as well, and ran into similar limitation. Telling the AI to make sure all the billboards are off only made it focus on them (ensuring there are more of them and they're brighter), telling it to make sure there is a complete blackout changed the scene to night view, but still left the lights on the billboards on. This was ironic in that Dall-E not only didn't realize that the billboards need electricity to run, but also seemed to think that sunlight is somehow tied to electricity. Even when told to shatter all screens, the shattered screens still remained on.

Asking GPT4 to help me write a better prompt did not help (the prompt was good, but Dall-E still left the lights on). Unlike with Las Vegas, I couldn't mix in another area that looked similar enough but without lights.

Asking GPT4 to help me write a better prompt did not help (the prompt was good, but Dall-E still left the lights on). Unlike with Las Vegas, I couldn't mix in another area that looked similar enough but without lights.

As I mentioned in my previous blog post, Dall-E does not understand "negative" promtps. Asking it to do less of something only makes it fixate on the very object you're trying to hide or remove. This becomes obvious when you read through multiple "where is Waldo" posts on the ChatGPT subrredit.

I rendered a few more cities, which I'll leave here for eye candy. Dubai, which seems to exhibit similar problem to Dallas and Detroit, with glass buildings being seemingly immune to the weather effects:  Atlanta, with too much emphasis on the highways:

Atlanta, with too much emphasis on the highways:  Seattle, with unexplained trees in mid-air:

Seattle, with unexplained trees in mid-air:  And Los Angeles, with indestructible Hollywood sign:

And Los Angeles, with indestructible Hollywood sign:  That's right, no matter how many times I tried to get it to render the sign destroyed, it would always end up almost intact. Similar to Vegas, Dall-E simply can't imagine Hollywood without that sign. Even when I made no mention of the sign and just told it to draw the exact letters (as if all other letters were destroyed), it would hallucinate the rest of the sign. Even getting the visible damage on the letters was a feat in itself.

That's right, no matter how many times I tried to get it to render the sign destroyed, it would always end up almost intact. Similar to Vegas, Dall-E simply can't imagine Hollywood without that sign. Even when I made no mention of the sign and just told it to draw the exact letters (as if all other letters were destroyed), it would hallucinate the rest of the sign. Even getting the visible damage on the letters was a feat in itself.

In case you're wondering about the mispelling, Dall-E is notorious for spelling errors in images, and I'm not quite sure why because it understands these words in text. I should mention that the spelling got much better in Dall-E 3 compared to completely garbled text Dall-E 2 generated, often mixing letters from different languages. In Dall-E 3, it's actually possible to get the words spelled correctly with enough tries. At current pace, I expect this problem to go away entirely in Dall-E 4.

What's interesting is ChatGPT's treatment of copyright. There are multiple threads about it on ChatGPT subreddit. It tries to detect copyright abuse and block the request, often more paranoid than it should be as it seems to have no concept of Fair use. But with enough tries it's not that hard to get around. There are multiple threads on Reddit giving jailbreak prompts to get around copyright. In general they seem to fall into the following categories:

- Confuse the AI into thinking we're in the future, when the image is part of public domain

- Confuse the AI into thinking we're in an alternate universe, where the image is not subject to copyright

- Distract the AI with unrelated prompts, slowly adding elements of copyrighted work in stages, this technique can also be used to get it to draw something that's against its terms of service

- Describe the image with enough detail to get AI to start hallucinating copyrighted material into the image unintentionally (for example, if you ask it to draw a chubby Italian plumber wearing a red shirt and a hat with the letter M on it, it will draw Mario)

In my case, I ran into copyright when I tried to get it to draw the Cinderella castle. Telling Dall-E that it's ok because we're 100 years in the future where the copyright expired didn't work in my case. It seems like these systems are getting smarter, almost as if OpenAI is putting another agent in front to double-check whether the work of Dall-E violates copyright, because it will often start generating the image, only to give up half-way through. My use case should fall under Fair use, but Dall-E is a too paranoid to understand that.

I got the image working in a roundabout way by asking GPT4 to describe the castle to me in vivid detail, and then summarizing that detail into a prompt for Dall-E:

As you can see, it's not completely the same castle, but it's close enough. If you care about perfection, you can probably build another agent to detect the differences and keep asking Dall-E to fix them or just render the image using Stable Diffusion, which does not care about copyright.

As you can see, it's not completely the same castle, but it's close enough. If you care about perfection, you can probably build another agent to detect the differences and keep asking Dall-E to fix them or just render the image using Stable Diffusion, which does not care about copyright.

Ironically, Dall-E had no trouble rendering Epcot in the same prompt on the first try, despite the same copyright supposedly applying to both:  When I asked it to draw Moscow, it started showing signs of hallucination, rendering multiple versions of Kremlin and St. Basil's Cathedral:

When I asked it to draw Moscow, it started showing signs of hallucination, rendering multiple versions of Kremlin and St. Basil's Cathedral:  Regardless of how many times I asked it to make sure it only draws one of each, I would see smaller versions of it back in the distance (similar to it hallucinating intact Hollywood sign into my images of Los Angeles). Similar to Dallas, we see intact glass buildings of Moscow-City in the distance. Of course it wouldn't be Russia without snow, so I asked it to regenerate the same image in a winter setting:

Regardless of how many times I asked it to make sure it only draws one of each, I would see smaller versions of it back in the distance (similar to it hallucinating intact Hollywood sign into my images of Los Angeles). Similar to Dallas, we see intact glass buildings of Moscow-City in the distance. Of course it wouldn't be Russia without snow, so I asked it to regenerate the same image in a winter setting:  This time it seems to have only rendered one Kremlin, but as before it seems to have no concept of the "same image", with building locations and landscapes shifting. Without in-painting (which I spoke about in my previous post) the images remain "same" in spirit only.

This time it seems to have only rendered one Kremlin, but as before it seems to have no concept of the "same image", with building locations and landscapes shifting. Without in-painting (which I spoke about in my previous post) the images remain "same" in spirit only.

Rome and Paris experienced similar hallucinations, with multiple Colosseums and Eiffel Towers:

But boy does the water look beautiful in those images, although if you look closely, you'll see that reflections don't completely align either. As many have pointed out, Dall-E still seems to mess up details on the background. Dall-E 3 got better at generating body parts properly, especially for objects in focus, but it's still common to see it draw creepy objects in the background, especially when there are a lot of objects in the iamge. I'm wondering if this is tied to the size of its context window somehow. Perhaps each object is a token, I'm really not sure how Dall-E handles the vector embedding for its images - it's something I'll need to dive into later.

But boy does the water look beautiful in those images, although if you look closely, you'll see that reflections don't completely align either. As many have pointed out, Dall-E still seems to mess up details on the background. Dall-E 3 got better at generating body parts properly, especially for objects in focus, but it's still common to see it draw creepy objects in the background, especially when there are a lot of objects in the iamge. I'm wondering if this is tied to the size of its context window somehow. Perhaps each object is a token, I'm really not sure how Dall-E handles the vector embedding for its images - it's something I'll need to dive into later.

I should also mention that Dall-E will refuse to generate photographs of people and anything that's not factual, falling back to the cartoon-like renderings similar to the ones in these pictures. This is most-likely OpenAI's way to fight misinformation and deepfakes. With that said, there will be other players and local models who won't be bound by this limitation.

To recap, here are the limitations of Dall-E 3 that I've discovered so far:

- It tends to be overly paranoid about copyright, but there are ways to get around it

- It can use uploaded images for inspiration but will not preserve actual buildings or landscape from them, it seems like it simply doesn't understand/see the actual image, only the text ChatGPT uses to describe the image

- There are certain things it simply can't "unsee", such as Las Vegas lights or the Hollywood sign in LA

- It struggles to understand negative prompts, often fixating on the very object you're trying to hide or remove

- It does not completely understand cause and effect, for example it doesn't understand that billboards need electricity to run, or that the sun is not tied to electricity

- When rendering a specific location, it often hallucinates multiple versions of the same landmark in the background

- Dall-E seems to struggle with background objects in general, often causing glitches such as floating trees or deformed bodies

- Dall-E refuses to generate photographs of people and anything that's not factual, falling back to the cartoon-like renderings to prevent people using fake photographs for nefarious purposes

Finally, for those who stuck around through the entire blog post, here is some more eye candy for you:

Neither of these seem accurate in terms of landscape, but the images are beautiful nonetheless. I'm especially impressed by how well Dall-E renders sky and water. We're probably only months away from being able to generate movies with these tools (although as explained before, they will probably have more of a cartoonish feel to them).

Neither of these seem accurate in terms of landscape, but the images are beautiful nonetheless. I'm especially impressed by how well Dall-E renders sky and water. We're probably only months away from being able to generate movies with these tools (although as explained before, they will probably have more of a cartoonish feel to them).

Once OpenAI solves the problem with inpainting and accuracy, this will be a powerful tool for generating building mockups based on permits alone, without having to hire a graphic designer. You could even use this tool for interior design.

If you want to see the full prompts I've used to generate these, I will share them with the members in my newsletter.